DON'T BE EVIL? LETHAL AUTONOMOUS WEAPONS AND ARTIFICIAL INTELLIGENCE. A SURVEY OF THE TECH SECTOR'S STANCE

PAX

We bring to the attention of our readers excerpts from an important study on the development of autonomous weapons, largely guided by artificial intelligence. The report, entitled Don’t be evil? A survey of the tech sector’s stance on lethal autonomous weapons, addresses weapon systems with increasing levels of autonomy.

Needless to say, artificial intelligence (AI), which is already embedded in conventional and strategic weapons systems, is changing the nature of modern warfare.


The text below, which consists of the Executive Summary and the Introduction, provides a broad overview.

The development of lethal autonomous weapons has raised deep concerns and has triggered an international debate regarding the desirability of these weapons. Lethal autonomous weapons, popularly known as killer robots, would be able to select and attack individual targets without meaningful human control. This report analyses which tech companies could potentially be involved in the development of these weapons. It highlights areas of work that are relevant to the military and have potential for applications in lethal autonomous weapons, specifically in facilitating the autonomous selection and attacking of targets. Companies have been included in this report because of links to military projects and/or because the technology they develop could potentially be used in lethal autonomous weapons.

Lethal autonomous weapons

Artificial intelligence (AI) has the potential to make many positive contributions to society. But to realize this potential, it is important to avoid the negative effects and backlashes from inappropriate use of AI. The use of AI by militaries is not in itself necessarily problematic, for example when used for autonomous take-off and landing, navigation or refueling. However, the use of AI to allow weapon systems to autonomously select and attack targets is highly controversial. The development of these weapons would have an enormous effect on the way war is conducted. It has been called the third revolution in warfare, after gunpowder and the atomic bomb. Many experts warn that these weapons would violate fundamental legal and ethical principles and would destabilize international peace and security. In particular, delegating the decision over life and death to a machine is seen as deeply unethical.

The autonomous weapons debate in the tech sector

In the past few years, there has been increasing debate within the tech sector about the impact of new technologies on our societies. Concerns related to privacy, human rights and other issues have been raised. The issue of weapon systems with increasing levels of autonomy, which could lead to the development of lethal autonomous weapons, has also led to discussions within the tech sector. For example, protests by Google employees regarding the Pentagon's Project Maven led the company to adopt a policy committing not to design or deploy AI in “weapons or other technologies whose principal purpose or implementation is to cause or directly facilitate injury to people”. In addition, more than 240 companies and organisations and more than 3,200 individuals have signed a pledge never to develop, produce or use lethal autonomous weapon systems.

Tech companies have a social responsibility to ensure that the rapid developments in artificial intelligence are used for the benefit of humankind. It is also in a company’s own interest to ensure it does not contribute to the development of these weapons, as this could lead to severe reputational damage. As Google Cloud CEO Diane Greene said, “Google would not choose to pursue Maven today because the backlash has been terrible for the company”.

The tech sector and increasingly autonomous weapons

A number of technologies can be relevant in the development of lethal autonomous weapons. Companies working on these technologies need to be aware of this potential and need to have policies that make explicit how and where they draw the line regarding the military application of their technologies. The report looks at tech companies from the following perspectives:

- Big tech

- Hardware

- AI software and system integration

- Pattern recognition

- Autonomous (swarming) aerial systems

- Ground robots

Level of concern

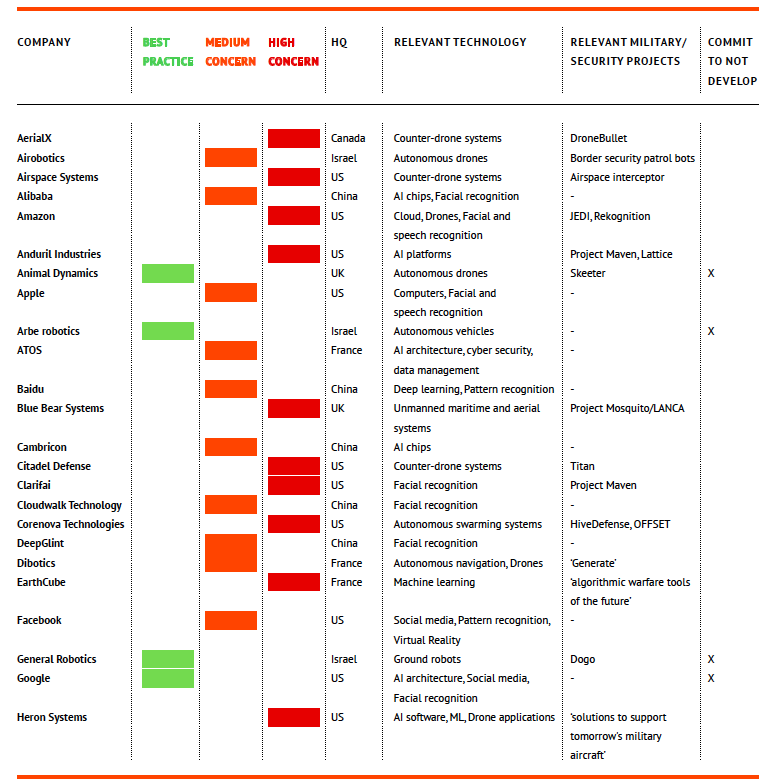

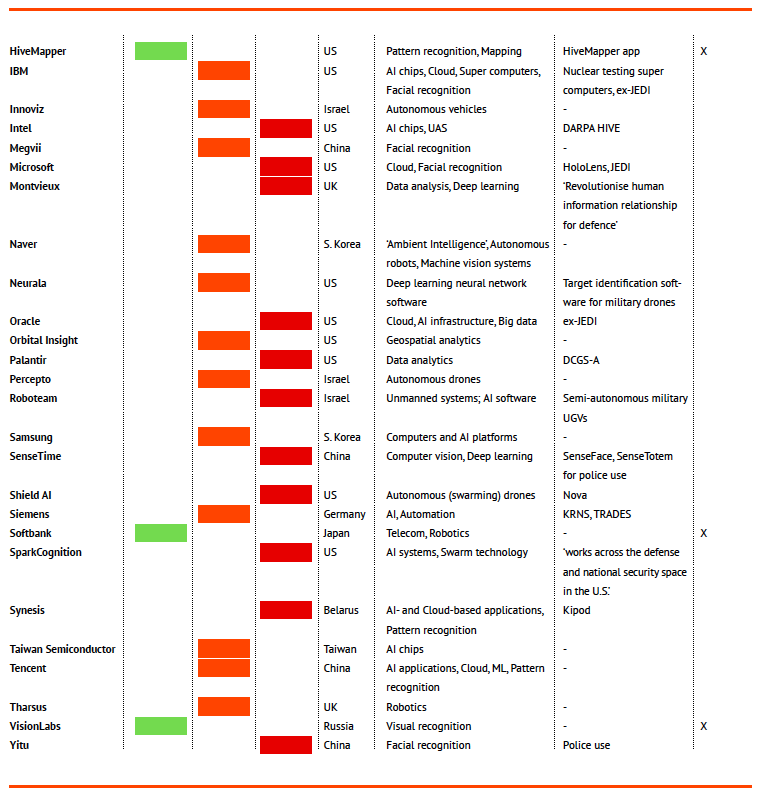

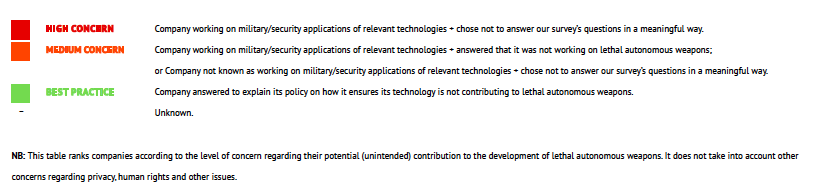

Fifty companies from 12 countries, all working on one or more of the technologies mentioned above, were selected and asked to participate in a short survey about their current activities and policies in the context of lethal autonomous weapons. Based on this survey and our own research, PAX has ranked these companies according to three criteria:

- Is the company developing technology that could be relevant in the context of lethal autonomous weapons?

- Does the company work on relevant military projects?

- Has the company committed to not contribute to the development of lethal autonomous weapons?

Based on these criteria, seven companies are classified as showing ‘best practice’, 22 as companies of ‘medium concern’, and 21 as ‘high concern’. To be ranked as ‘best practice’ a company must have clearly committed to ensuring its technology will not be used to develop or produce autonomous weapons. Companies are ranked as high concern if they develop relevant technology, work on military projects and have not yet committed to not contributing to the development or production of these weapons.

Recommendations

This is an important debate. Tech companies need to decide what they will and will not do when it comes to military applications of artificial intelligence. There are a number of steps that tech companies can take to prevent their products from contributing to the development and production of lethal autonomous weapons.

- Commit publicly to not contribute to the development of lethal autonomous weapons.

- Establish a clear policy stating that the company will not contribute to the development or production of lethal autonomous weapon systems.

- Ensure employees are well informed about what they work on and allow open discussions on any related concerns.


Introduction to the Report

Artificial Intelligence (AI) is progressing rapidly and has enormous potential for helping humanity in countless ways, from improving healthcare to lifting people out of poverty, and helping achieve the United Nations Sustainable Development Goals – if deployed wisely.[1] In recent years, there has been increasing debate within the tech sector about the impact of AI on our societies, and where to draw the line between acceptable and unacceptable uses. Concerns related to privacy, human rights and other issues have been raised. The issue of weapon systems with increasing levels of autonomy, which could lead to lethal autonomous weapons, has also led to strong discussions within the tech sector.

In reaction to a project with the Pentagon, Google staff signed an open letter saying “We believe that Google should not be in the business of war”.[2] Following the controversy Google published its AI principles, “which include a commitment to not pursue AI applications for weapons”.[3]

Microsoft employees responded to the company’s efforts to participate in another US military contract by affirming that they worked at Microsoft in the hope of empowering “every person on the planet to achieve more, not with the intent of ending lives and enhancing lethality”.[4]

In 2014, Canadian company Clearpath Robotics became the first company to commit not to contribute to the development of lethal autonomous weapons. It said: “This technology has the potential to kill indiscriminately and to proliferate rapidly; early prototypes already exist. Despite our continued involvement with Canadian and international military research and development, Clearpath Robotics believes that the development of killer robots is unwise, unethical, and should be banned on an international scale”.[5]

To realize the great potential of AI to make the world better, as described above, it is important to avoid the negative effects and backlashes from inappropriate AI use. The use of AI by militaries is not necessarily problematic, for example for autonomous take-off and landing, navigation or refueling. However, the development of lethal autonomous weapons, which could select and attack targets on their own, has raised deep concerns and triggered heated controversy.

This is an important debate in which tech companies play a key role. To ensure that this debate is as fact-based and productive as possible, it is valuable for tech companies to articulate and publicise clear policies on their stance, clarifying where they draw the line between what AI technology they will and will not develop.

Concerns about Lethal Autonomous Weapons

Lethal autonomous weapon systems are weapons that can select and attack individual targets without meaningful human control.[6] This means that the decision to use lethal force is delegated to a machine, and that an algorithm can decide to kill humans. The function of autonomously selecting and attacking targets could be applied to various autonomous platforms, for instance drones, tanks, fighter jets or ships. The development of such weapons would have an enormous effect on the way war is conducted and has been called the third revolution in warfare, after gunpowder and the atomic bomb.[7]

Many experts warn that lethal autonomous weapons would violate fundamental legal and ethical principles and would be a destabilising threat to international peace and security. Moral and ethical concerns have centred around the delegation of the kill decision to an algorithm. Legal concerns are related to whether lethal autonomous weapons could comply with international humanitarian law (IHL, also known as the law of war), more specifically whether they could properly distinguish between civilians and combatants and make proportionality assessments.[8] Military and legal scholars have pointed out an accountability vacuum regarding who would be held responsible in the case of an unlawful act.[9]

Others have voiced concerns that lethal autonomous weapons would be seriously destabilizing and threaten international peace and security. For example, by enabling risk-free and untraceable attacks, they could lower the threshold to war and weaken norms regulating the use of force. Delegating decisions to algorithms could result in the pace of combat exceeding human response time, creating the danger of rapid conflict escalation. Lethal autonomous weapons might trigger a global arms race in which they become mass-produced, cheap and ubiquitous since, unlike nuclear weapons, they require no hard-to-obtain raw materials. They might therefore proliferate to a large number of states and end up in the hands of criminals, terrorists and warlords. Lethal drones the size and price of smartphones, equipped with GPS and facial recognition, might enable anonymous political murder, ethnic cleansing or acts that even loyal soldiers would refuse to carry out. Algorithms might target specific groups based on sensor data such as perceived age, gender, ethnicity, dress code, or even place of residence or worship. Experts also warn that “the perception of a race will prompt everyone to rush to deploy unsafe AI systems”.[10]

“Because they do not require individual human supervision, autonomous weapons are potentially scalable weapons of mass destruction; an essentially unlimited number of such weapons can be launched by a small number of people. This is an inescapable logical consequence of autonomy”, wrote Stuart Russell, computer science professor at the University of California, Berkeley.[11] Therefore, “pursuing the development of lethal autonomous weapons would drastically reduce international, national, local, and personal security”.[12] Decades ago, scientists used a similar argument to convince presidents Lyndon Johnson and Richard Nixon to renounce the US biological weapons programme and ultimately bring about the Biological Weapons Convention.

Twenty-eight states, including Austria, Brazil, China, Egypt, Mexico and Pakistan, have so far called for a ban, and most states agree that some form of human control over weapon systems and the use of force is required.[13] UN Secretary-General António Guterres has called lethal autonomous weapons “morally repugnant and politically unacceptable”, urging states to negotiate a ban on these weapons. The International Committee of the Red Cross (ICRC) has called on states to establish internationally agreed limits on autonomy in weapon systems that address legal, ethical and humanitarian concerns. The Campaign to Stop Killer Robots, a coalition of over a hundred civil society organisations across 54 countries, aims to stop the development and use of fully autonomous weapons through an international treaty. An Ipsos poll conducted in 26 countries found that 61 per cent of respondents oppose lethal autonomous weapons. Two-thirds answered that such weapons would “cross a moral line because machines should not be allowed to kill”.[14]

This Report

This report analyses developments in the tech sector, pointing to areas of work that are highly relevant to the military and have potential for applications in lethal autonomous weapons, specifically in facilitating the autonomous selection and attack of targets. While certain technologies may well ensure sufficient human control over a weapon’s use, it is often unclear what this entails and how this is ensured. Similarly, certain technologies may be intended for uncontroversial uses that do not cause harm, but it is often unclear how companies ensure their technology will not be used for lethal applications, and especially not for autonomous weapons.

Whereas military production was in the past naturally the domain of the arms industry, the tech sector has become increasingly involved with the emergence of the digital era. This report therefore analyses the connections between the public and private sectors in the area of military technology with increasingly autonomous capabilities.

The research is based on information available in the public domain, either from company websites or from trusted media. PAX also sent out a survey to 50 companies in the tech sector that we deemed relevant because of their (actual or potential) connections with the military, as a development partner and/or as a supplier of specific products. The survey asked companies about their awareness of the debate around autonomous weapons, whether the company has an official position regarding these weapons, and whether they have a policy to reflect this position (see ‘Annex: Survey Questions’). These companies have been ranked based on three criteria:

- Is the company developing technology that could be relevant in the context of lethal autonomous weapons?

- Does the company work on relevant military projects?

- Has the company committed to not contribute to the development of lethal autonomous weapons?

This report is not intended to be an exhaustive overview of such activities, nor of the tech sector itself; rather, it covers a relevant range of products and companies to illustrate the role of this sector in the development of increasingly autonomous weapons. This role brings a responsibility for tech companies to be mindful of the potential applications of certain technologies and possible negative effects when applied to weapon systems.

Many emerging technologies are dual-use and have clear peaceful uses. In the context of this report, the concern is with products that could potentially also be used in lethal autonomous weapons. Moreover, there is the worry that unless companies develop proper policies, some technologies not intended for battlefield use may ultimately end up being used in weapon systems.

The development of lethal autonomous weapons takes place across a wide spectrum, with levels of technology ranging from simple automation to full autonomy, applied to different functions of weapon systems. This has raised concerns about a slippery slope in which the human role in decision-making on the use of force gradually diminishes, prompting suggestions that companies, through their research and production, must help guarantee meaningful human control over decisions to use force.


*

https://www.globalresearch.ca/survey-tech-sectors-stance-lethal-autonomous-weapons/5687251